Which hash type represents an individual data chunk processed during an EMC Avamar backup?

- A. Atomic

- B. Root

- C. Composite

- D. Metadata

Answer : A

For each file that is backed up in an EMC Avamar system, how many total bytes are added to the file cache?

- A. 20

- B. 24

- C. 40

- D. 44

Answer : D

The most important thing to do on a client with so many files is to make sure that the file cache is sized appropriately. The file cache is responsible for the vast majority (>90%) of the performance of the Avamar client. If there's a file cache miss, the client has to go and thrash your disk for a while, chunking up a file that may already be on the server.

So how do you tune the file cache size?

The file cache starts at 22MB in size and doubles each time it grows. Each file on a client uses 44 bytes of space in the file cache (two SHA-1 hashes consuming 20 bytes each and 4 bytes of metadata). For 25 million files, the client will generate just over 1GB of cache data (25,000,000 × 44 bytes = 1.1GB). Source: http://jslabonte.blogspot.com/2013/08/avamar-and-large-dataset-with-multiples.html
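The sizing arithmetic above can be sketched in a few lines of Python. This is only an illustration of the numbers quoted in the explanation, not an Avamar tool: the function names are hypothetical, and treating "MB" as 2**20 bytes is an assumption.

```python
ENTRY_BYTES = 2 * 20 + 4   # two 20-byte SHA-1 hashes plus 4 bytes of metadata
INITIAL_CACHE_MB = 22      # starting file cache size quoted above
MB = 1024 * 1024           # assumption: "MB" means 2**20 bytes


def file_cache_bytes(n_files: int) -> int:
    """Bytes of file cache needed for n_files entries (hypothetical helper)."""
    return n_files * ENTRY_BYTES


def cache_size_after_growth_mb(required_bytes: int) -> int:
    """Double the cache from its initial size until it can hold required_bytes."""
    size_mb = INITIAL_CACHE_MB
    while size_mb * MB < required_bytes:
        size_mb *= 2
    return size_mb


if __name__ == "__main__":
    needed = file_cache_bytes(25_000_000)
    print(needed, cache_size_after_growth_mb(needed))
```

Under these assumptions, 25 million files need 1,100,000,000 bytes, and the doubling sequence 22, 44, 88, ... first covers that at 1408MB.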

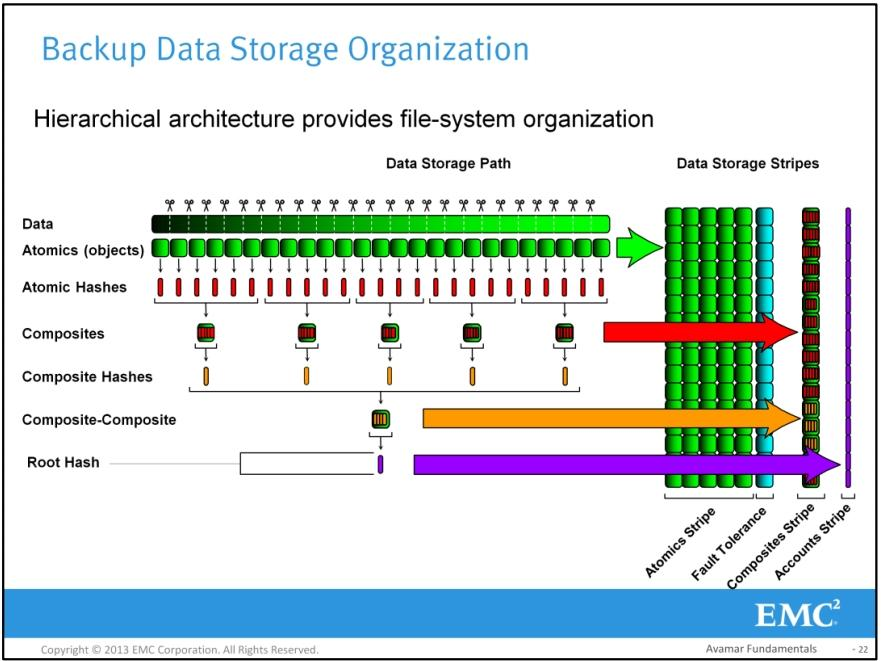

What are three types of EMC Avamar hashes?

- A. Composite, Root, and Atomic

- B. Root, Atomic, and Index

- C. Composite, Atomic, and Stripe

- D. Root, Atomic, and Parity

Answer : A

What is used by EMC Avamar to provide system-wide fault tolerance?

- A. RAID, RAIN, Checkpoints, and Replication

- B. RAID, RAIN, Checkpoints, and HFS check

- C. Asynchronous crunching, Parity, RAIN, and Checkpoints

- D. HFS check, RAIN, RAID, and Replication

Answer : A

The EMC Avamar client needs to back up a file and has performed sticky-byte factoring. This results in the following:

✑ Seven (7) chunks that will compress at 30%

✑ Four (4) chunks that will compress at 23%

✑ Two (2) chunks at 50% compression

How many chunks will be compressed prior to hashing?

- A. 4

- B. 7

- C. 9

- D. 13

Answer : C

The answer of nine corresponds to the seven chunks at 30% plus the two chunks at 50%; per the answer key, the four chunks that would compress by only 23% are not compressed.

Avamar has a rather nifty technology called Sticky Byte Factoring, which identifies the changed information inside a file by breaking the file into variable-length objects. This is much more efficient than fixed-size approaches, because a change that inserts or removes bytes early in a fixed-length sequence shifts every subsequent block, chunk, or object in that sequence. That in turn changes all the fingerprints taken after the changed data, so you end up with a lot of new chunks even if the data really hasn't changed all that much. Sticky Byte Factoring, on the other hand, can tell exactly what has changed, not just that something has changed.
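To make the contrast with fixed-size chunking concrete, here is a minimal sketch of generic content-defined chunking. It is not Avamar's actual Sticky Byte Factoring algorithm, whose details the explanation above does not give. The `WINDOW` and `DIVISOR` values, the use of CRC-32 as the boundary test, and all function names are assumptions chosen for illustration. A production chunker would use a rolling hash instead of rehashing the window at every position.

```python
import zlib

WINDOW = 16    # bytes of trailing context examined at each position (illustrative)
DIVISOR = 64   # cut where the window hash is divisible by this (illustrative)


def fixed_chunks(data: bytes, size: int = 64) -> list[bytes]:
    """Split data into fixed-size chunks; the last chunk may be shorter."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def content_defined_chunks(data: bytes) -> list[bytes]:
    """Cut wherever the hash of the last WINDOW bytes meets the boundary test.

    Boundaries depend only on nearby content, so they move with the data
    when bytes are inserted earlier in the stream.
    """
    chunks, start = [], 0
    for end in range(WINDOW, len(data) + 1):
        if zlib.crc32(data[end - WINDOW:end]) % DIVISOR == 0:
            chunks.append(data[start:end])
            start = end
    if start < len(data):
        chunks.append(data[start:])   # trailing bytes after the last boundary
    return chunks


def new_chunks(old: list[bytes], new: list[bytes]) -> int:
    """Count distinct chunks in `new` that never appear in `old`."""
    return len(set(new) - set(old))
```

For example, prepending one byte to a buffer and comparing `new_chunks(content_defined_chunks(data), content_defined_chunks(b"X" + data))` yields at most two new chunks in this sketch, because every boundary after the first simply shifts along with the data. With `fixed_chunks`, the same edit typically changes every chunk.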